Predictive Insights for Seamless Travel

Predictive Modeling Techniques

Predictive modeling uses historical data to forecast future trends and outcomes. Techniques range from simple linear regression to machine learning algorithms such as neural networks and support vector machines. The core principle is the same in each case: identify patterns and relationships in historical data and use them to inform predictions. This allows organizations to adapt to likely changes ahead of time and to base decisions on data.

Different modeling approaches have varying strengths and weaknesses. Choosing the right technique depends on the specific data characteristics and the desired level of accuracy. For instance, linear regression is suitable for straightforward relationships, while more sophisticated algorithms are necessary for complex, non-linear patterns.
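As an illustration of the simplest end of that range, the following is a minimal sketch of ordinary least squares for a single input variable, written with the standard library only. The weekly booking figures and the names `fit_line`, `weeks`, and `bookings` are hypothetical and serve only to show the mechanics.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Spread of x around its mean, and co-movement of x and y.
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical data: bookings observed over five consecutive weeks.
weeks = [1, 2, 3, 4, 5]
bookings = [110, 120, 130, 140, 150]

slope, intercept = fit_line(weeks, bookings)
forecast_week_6 = slope * 6 + intercept
print(f"forecast for week 6: {forecast_week_6:.1f}")
```

A straight line fits this data exactly because the relationship is linear by construction. When the errors of such a fit show a clear pattern, that is usually a sign that a more flexible model is worth trying.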

Data Preparation and Feature Engineering

Accurate predictive modeling hinges on meticulous data preparation: cleaning the data, handling missing values, and transforming variables into formats the chosen model can use. Data preparation often accounts for a significant part of the modeling effort, and it can dramatically affect the accuracy of the results. Engineering relevant features from the raw data is equally important for model performance.

Feature engineering is the process of creating new variables from existing ones. This can involve combining existing variables, extracting relevant information from text or images, or using domain expertise to identify key factors. This step often significantly improves the model's predictive power.
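The two steps described in this section can be sketched together. The example below fills a missing value with the mean of the known values and derives new features from two date fields. The record layout and the field names (`booked_on`, `departs_on`, `price`) are assumptions made for illustration, not part of any particular dataset.

```python
from datetime import date
from statistics import mean

# Hypothetical raw records; None marks a missing value.
records = [
    {"booked_on": date(2024, 3, 1), "departs_on": date(2024, 3, 15), "price": 200.0},
    {"booked_on": date(2024, 3, 5), "departs_on": date(2024, 3, 8), "price": None},
    {"booked_on": date(2024, 3, 9), "departs_on": date(2024, 4, 8), "price": 400.0},
]


def prepare(rows):
    """Impute missing prices and derive model-ready features."""
    known_prices = [r["price"] for r in rows if r["price"] is not None]
    fill_value = mean(known_prices)  # simple mean imputation
    prepared = []
    for r in rows:
        missing = r["price"] is None
        prepared.append({
            "price": fill_value if missing else r["price"],
            # Keep a flag so the model can tell imputed values apart.
            "price_was_missing": missing,
            # Engineered feature: days between booking and departure.
            "lead_days": (r["departs_on"] - r["booked_on"]).days,
            # Engineered feature: Saturday (5) or Sunday (6) departure.
            "departs_on_weekend": r["departs_on"].weekday() >= 5,
        })
    return prepared


features = prepare(records)
for row in features:
    print(row)
```

Mean imputation is only one option among several. In a real workflow the fill value would normally be computed from the training data alone, so that information from the evaluation data does not leak into the model.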

Model Evaluation and Validation

Evaluating the performance of a predictive model is essential. For classification models, metrics such as accuracy, precision, recall, and F1-score, computed on data held out from training, indicate how well the model generalizes to unseen data. Each metric highlights different strengths and limitations, so they should be considered together rather than in isolation. Cross-validation, which repeatedly trains on one part of the data and evaluates on the rest, gives a more reliable estimate of performance on new data than a single split.
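For concreteness, here is a small sketch of how these metrics follow from counts of true positives, false positives, and false negatives, together with a plain k-fold index generator. The function names and the label vectors are illustrative assumptions. A library such as scikit-learn provides tested equivalents that would normally be preferred.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def k_fold_indices(n, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    # Distribute the remainder so fold sizes differ by at most one.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        test = list(range(start, stop))
        train = [i for i in range(n) if i < start or i >= stop]
        yield train, test
        start = stop


# Hypothetical labels and predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)

folds = list(k_fold_indices(10, 3))
print([len(test) for _, test in folds])
```

In each fold, the model would be trained on the `train` indices and scored on the `test` indices, and the scores averaged across folds. The data is usually shuffled before splitting, which this sketch omits for brevity.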

Deployment and Monitoring

Deploying the model into a production environment means integrating it into existing systems and processes so that it can generate predictions where they are needed, often in real time. Fitting the model into the existing business workflow is often complex but necessary. Once deployed, the model's performance should be monitored continuously to detect drift in the underlying data distribution, which would otherwise erode accuracy unnoticed.
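One simple way to watch for drift is to compare recent values of an input feature against a baseline captured at training time. The sketch below measures how far the recent mean has moved, in units of the baseline standard deviation. The threshold of 2.0 and the sample values are arbitrary assumptions chosen for illustration, and production systems often use more robust statistics.

```python
from statistics import mean, stdev


def mean_shift(baseline, recent):
    """Distance of the recent mean from the baseline mean,
    expressed in baseline standard deviations."""
    return abs(mean(recent) - mean(baseline)) / stdev(baseline)


def has_drifted(baseline, recent, threshold=2.0):
    """Flag drift when the shift exceeds the (assumed) threshold."""
    return mean_shift(baseline, recent) > threshold


# Hypothetical feature values seen at training time and in production.
baseline = [10, 12, 11, 13, 9, 10, 12, 11]
stable_window = [11, 10, 12, 11]
shifted_window = [18, 19, 17, 20]

print(has_drifted(baseline, stable_window))
print(has_drifted(baseline, shifted_window))
```

A check like this would typically run on a schedule for each important feature, with a flagged result triggering investigation or retraining.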

Applications in Various Industries

Predictive insights are finding widespread applications across diverse industries. In finance, predictive models can forecast stock prices and assess credit risk. In healthcare, they can predict patient readmission rates and identify potential disease outbreaks. In retail, they can forecast demand and optimize inventory management. These are just a few examples of the transformative power of predictive modeling across numerous industries.

Ethical Considerations

The increasing reliance on predictive models necessitates careful consideration of ethical implications. Bias in the data used to train the model can lead to unfair or discriminatory outcomes. Ensuring fairness and transparency in the model's design and deployment is crucial to avoid unintended consequences. Furthermore, the privacy of individuals whose data is used to train the models must be protected.

Future Trends and Advancements

The field of predictive modeling is constantly evolving, with new techniques and algorithms emerging regularly. Advances in machine learning, particularly in deep learning and reinforcement learning, are opening new possibilities for more complex and accurate predictions. The ability to analyze vast amounts of data with these algorithms is changing how businesses operate and deepening the understanding of complex phenomena.

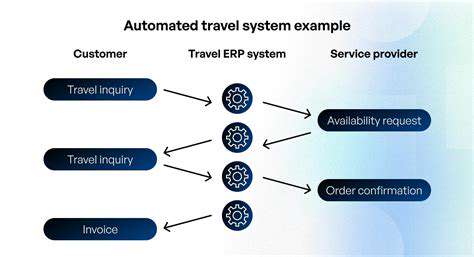

Seamless Integration and Effortless Management

Seamless Data Flow

Seamless data flow is crucial for any modern application. It requires careful consideration of data structures, formats, and transfer mechanisms so that data moves between components and systems with minimal bottlenecks. Smooth data exchange directly affects responsiveness and user experience, and a well-designed data pipeline keeps data accessible and usable by the different parts of the application, which supports flexibility and scalability.

Robust data validation and transformation processes are essential to maintain data integrity throughout the pipeline. This ensures that data is accurate, consistent, and reliable, preventing errors that can cascade through the application. Proper error handling and logging are also critical to pinpoint issues and facilitate efficient troubleshooting.
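The validation, error handling, and logging described above might look like the following sketch. The schema in `REQUIRED_FIELDS`, the logger name, and the sample records are hypothetical. The point is only that invalid records are rejected and logged at the boundary instead of propagating further into the pipeline.

```python
import logging

logging.basicConfig(level=logging.WARNING)
logger = logging.getLogger("pipeline")  # hypothetical logger name

# Assumed schema: field name -> expected type.
REQUIRED_FIELDS = {"id": int, "origin": str, "destination": str, "price": float}


def validate(record):
    """Return a list of problems; an empty list means the record is valid."""
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            problems.append(f"{field} should be {expected.__name__}")
    price = record.get("price")
    if isinstance(price, float) and price < 0:
        problems.append("price must be non-negative")
    return problems


def run_pipeline(records):
    """Split records into valid and rejected, logging each rejection."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            logger.warning("rejected record %r: %s",
                           record.get("id"), "; ".join(problems))
            rejected.append(record)
        else:
            valid.append(record)
    return valid, rejected


sample = [
    {"id": 1, "origin": "LIS", "destination": "OPO", "price": 49.0},
    {"id": 2, "origin": "LIS", "destination": "OPO"},
    {"id": 3, "origin": "LIS", "destination": "OPO", "price": -5.0},
]
valid, rejected = run_pipeline(sample)
print(len(valid), len(rejected))
```

Returning a list of problems instead of raising on the first one makes the log entry more useful, since a single line then describes everything wrong with a record.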

Intuitive User Interface

A user-friendly interface is paramount in ensuring a positive user experience. Clear and concise design elements, alongside intuitive navigation, are essential to guide users effortlessly through the application. This includes well-organized menus, clear instructions, and helpful prompts, all contributing to a smooth and enjoyable user experience.

Accessibility features are vital for inclusivity: the application should be usable by people with diverse needs and abilities. Simple adjustments such as keyboard navigation and alternative text for images make the application accessible to a wider audience, as do clear, concise instructions for complex tasks.

Efficient Resource Management

Efficient resource management is crucial for the stability and performance of any application. This involves optimizing memory usage, minimizing CPU load, and controlling network traffic. Careful planning of resource allocation helps prevent performance bottlenecks and keeps the application responsive under varying loads. It also means releasing temporary files and data structures once they are no longer needed, so that memory and disk space do not leak.
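As a small example of the cleanup side of resource management, the sketch below uses Python context managers so that file handles are closed and a scratch directory is deleted even if processing fails partway. The function name and file name are hypothetical.

```python
import os
import tempfile


def count_lines_via_scratch_file(lines):
    """Write lines to a temporary file, read them back, and clean up."""
    with tempfile.TemporaryDirectory() as scratch_dir:
        path = os.path.join(scratch_dir, "batch.txt")
        # Each 'with' block closes its file handle on exit.
        with open(path, "w", encoding="utf-8") as handle:
            handle.writelines(line + "\n" for line in lines)
        with open(path, encoding="utf-8") as handle:
            # Iterate lazily so the whole file is never held in memory.
            count = sum(1 for _ in handle)
    # The directory and its contents are removed when the block exits.
    return count, os.path.exists(path)


count, still_exists = count_lines_via_scratch_file(["a", "b", "c"])
print(count, still_exists)
```

Tying cleanup to scope in this way avoids relying on every caller to remember to delete files or close handles.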

Scalability is another important aspect of resource management. The application should be able to handle increasing demands without significant performance degradation. This requires a design that can adapt to varying workloads and accommodate future growth. Effective resource management principles are critical for long-term sustainability and maintainability of the application.

Robust Security Measures

Robust security measures protect sensitive data and prevent unauthorized access. Encryption protocols, access control mechanisms, and regular security audits help maintain data integrity and confidentiality, protect user data from potential threats, and keep the application trustworthy. Regular vulnerability assessments complement these measures by identifying and addressing security risks proactively.
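Of the measures listed, access control is the easiest to show in a few lines. The following is a minimal sketch of a role-based check that denies by default. The roles and actions in `ROLE_PERMISSIONS` are invented for illustration, and a real system would typically load them from configuration and combine the check with authentication.

```python
# Hypothetical mapping of roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "delete"},
}


def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("editor", "write"))
print(is_allowed("viewer", "delete"))
print(is_allowed("guest", "read"))
```

The deny-by-default choice matters: a role missing from the table gets no access at all, so a configuration mistake fails closed.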

Regular security updates and patches are critical to address emerging vulnerabilities. Implementing strong authentication methods, such as multi-factor authentication, enhances security further. Security measures should be thoroughly tested to validate their effectiveness and identify any potential loopholes. A comprehensive security strategy is essential for maintaining a secure and trustworthy application environment.

Scalability and Maintainability

The ability to scale the application to accommodate future growth and changing business requirements is essential. This involves designing the application with scalability in mind, using modular components, and leveraging cloud-based resources. A modular architecture promotes adaptability and allows for easier future modification and maintenance.

Maintainability is crucial for long-term success. A well-organized codebase with clear documentation, well-defined interfaces, a clear separation of concerns, and a consistent coding style reduces the time and resources needed for future updates and improvements.

Performance Optimization

Optimizing the application for performance is critical for a positive user experience. This involves identifying and addressing performance bottlenecks, optimizing database queries, and leveraging caching mechanisms to reduce response times. Performance optimization should be a continuous process, not just a one-time effort. Regular performance monitoring and analysis are crucial for identifying areas for improvement and ensuring optimal application responsiveness.

Using efficient algorithms, minimizing network calls, and caching strategically are the main levers of performance optimization. Together with proper resource management and careful handling of large datasets, they help ensure a smooth and responsive user experience.
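Caching is the most direct of these techniques to demonstrate. The sketch below uses `functools.lru_cache` from the Python standard library to memoize a function that stands in for an expensive database query or network call. The function `fare_quote` and its formula are placeholders, and the counter exists only to show that the second call never reaches the function body.

```python
from functools import lru_cache

call_count = 0  # counts how often the expensive body actually runs


@lru_cache(maxsize=128)
def fare_quote(origin, destination):
    """Stand-in for an expensive lookup (database query, network call)."""
    global call_count
    call_count += 1
    return len(origin) * 10 + len(destination)  # placeholder computation


first = fare_quote("LIS", "OPO")   # computed and stored in the cache
second = fare_quote("LIS", "OPO")  # served from the cache
info = fare_quote.cache_info()
print(first, second, call_count, info.hits, info.misses)
```

A cache like this suits results that are safe to reuse. For data that changes over time, the cache needs an expiry or invalidation strategy, which `lru_cache` does not provide by itself.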